The release of OpenAI’s GPT-5 model is a timely reminder that the critical concern around AI Ethics isn’t the tools themselves but remains the human beings behind them. There has been much criticism over the launch weekend around user facing model selection on the hosted ChatGPT service [1], failures of the internal routing system [2] that allegedly selects the “best” (or in practice the “cheapest”) model variant and of course the performance charts [3].

The purpose of this post is to examine the full impact of the latter and what it says about Ethics in the world of Generative Artificial Intelligence (GenAI). If you were concerned about the rise of Skynet [4] from the Terminator [5] films then rest assured counting the number of times the letter r appears in the word strawberry continues to be a challenge [6]. However, OpenAI inadvertently have also given us the means to defeat Skynet (see below).

Performance Benchmarking

In the first week of August 2025, OpenAI [7] released their GPT-5 model [8]. It was touted by the CEO of OpenAI, Sam Altman as conversing with “a legitimate PhD level expert” in it’s launch video [9] and follow-up material:

Overall, GPT‑5 is less effusively agreeable, uses fewer unnecessary emojis, and is more subtle and thoughtful in follow‑ups compared to GPT‑4o. It should feel less like “talking to AI” and more like chatting with a helpful friend with PhD‑level intelligence.

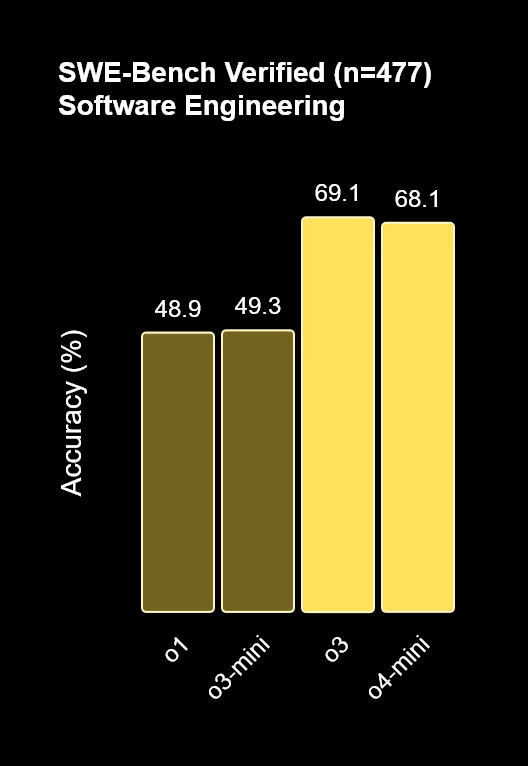

The following chart was shown for the SWE Bench Verified series of tests (ironically originally introduced by OpenAI in 2024) [10]:

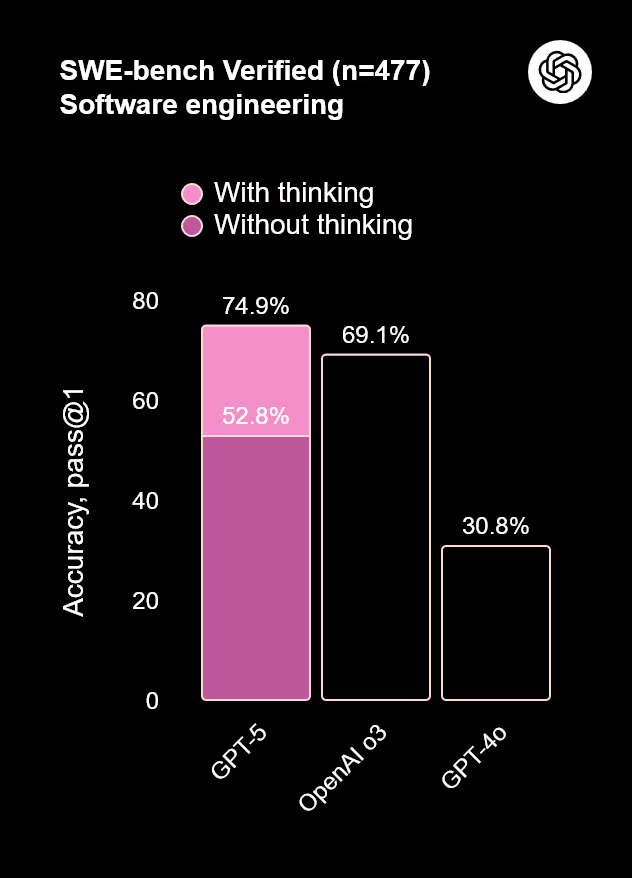

The height of the second bar (o3) does not correspond to its numerical value as shown by the correct chart on the GPT-5 launch blog post [8]:

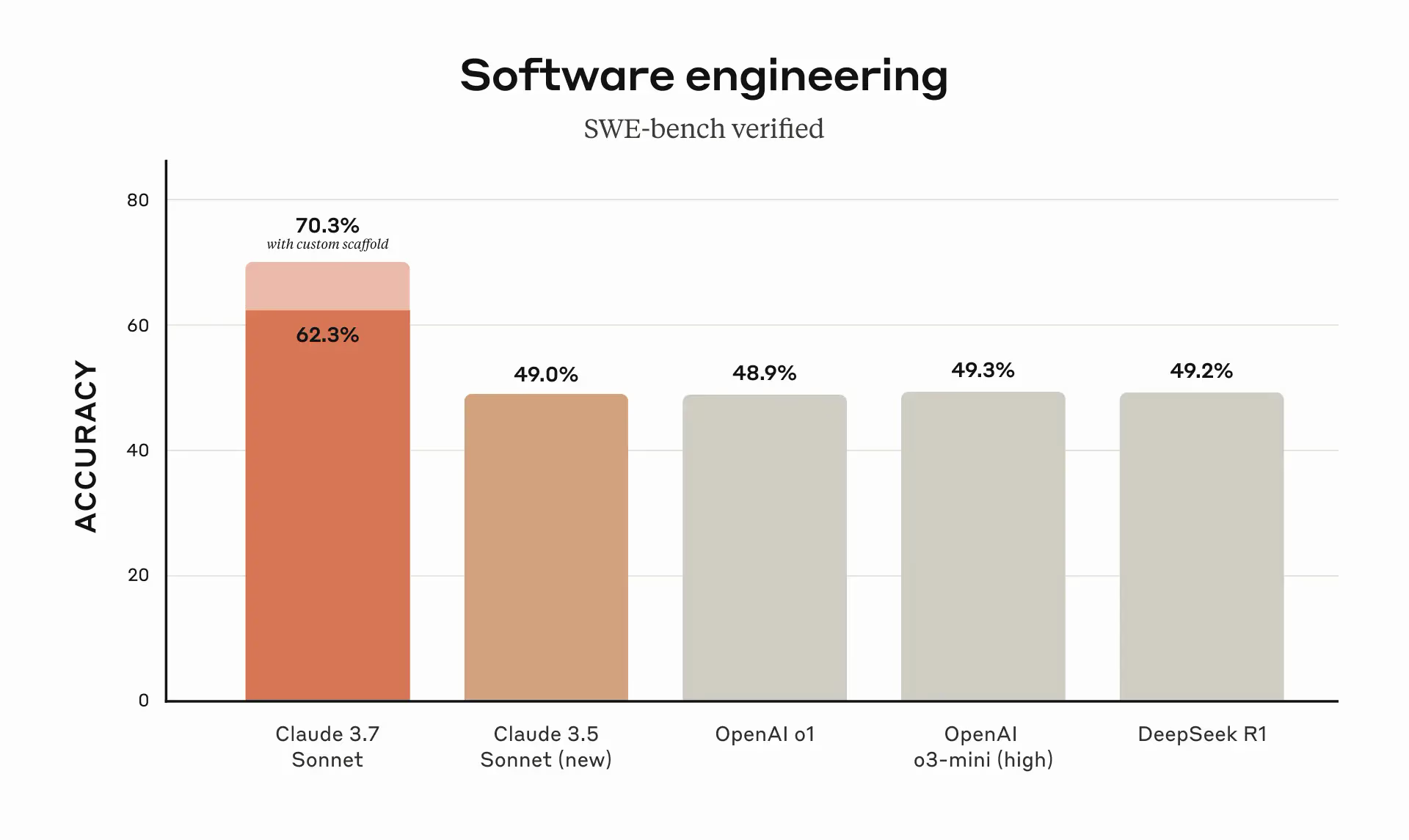

The corrected chart shows that the gap between GPT-5 and the previous o3 (reasoning) model released only 4 months prior is small. It’s also confirms that its previous flagship model, GPT-4o (released 18 months prior) but not been updated or superseded has been outpaced by Anthropic and their Claude Sonnet series, which improved its SWE Bench score from 33% (at launch in June 2024), to an updated model in Jan 2025 with a score of 49% [11] before their recent release of Claude 3.7 achieving a score of 62.3% without thinking [12].

Combining both sources shows that GPT-5 without thinking is not competitive with Anthropic, but so what? Why not force thinking mode on GPT-5? Until recently this wasn’t an easy option for ChatGPT users, nor is the thinking model variant available on the API. Secondly, the thinking element consumes more Output tokens increasing the cost for API users or resulting in ChatGPT users hitting their limits sooner. One option might be to revert to the previous o3 model but what’s not made clear in the OpenAI chart is that the response time is incredibly slow [13], in fact the phrase used by OpenAI themselves on their model card is “Slowest”.

This benchmark was intended to highlight the coding pedigree of the model and its ability to support agentic workflows. A common agentic application is interactive code development with the recent explosion of Command Line Interface (CLI) tools such as Claude Code, Codex, Gemini CLI as well as various open source equivalents such as Open Code. For these applications the long response times of a reasoning model such as o3 slows the pace of development and is counter to productivity.

The most explosive revelation one week from the GPT-5 launch has been the addition of the following text:

All SWE-bench evaluation runs use a fixed subset of n=477 verified tasks which have been validated on our internal infrastructure.

That’s right, the numbers we’ve been looking at for the SWE Bench Verified are in fact not the SWE Bench Verified, which consists of 500 tasks but instead a hand selected, tailored subset of 477 tasks. So whilst the comparison of GPT-5 to the previous o3 model is valid, the comparison to other external models are not. We should hold OpenAI to the same standards as other providers and use the full SWE Bench Verified dataset. The fact that the 13 tasks are missing should be treated much like any other testing regime when aggregating results and assume that they failed i.e. an accuracy of 0%.

If we factor in that only 477 tasks were completed and scale for the 500 original tasks, the adjusted scores for GPT-5 without thinking is 50.3% vs 52.8%. With thinking the adjusted score is 71.4% vs 74.9%. Given the relatively small adjustment (4.6%), why bother being selective with the scores?

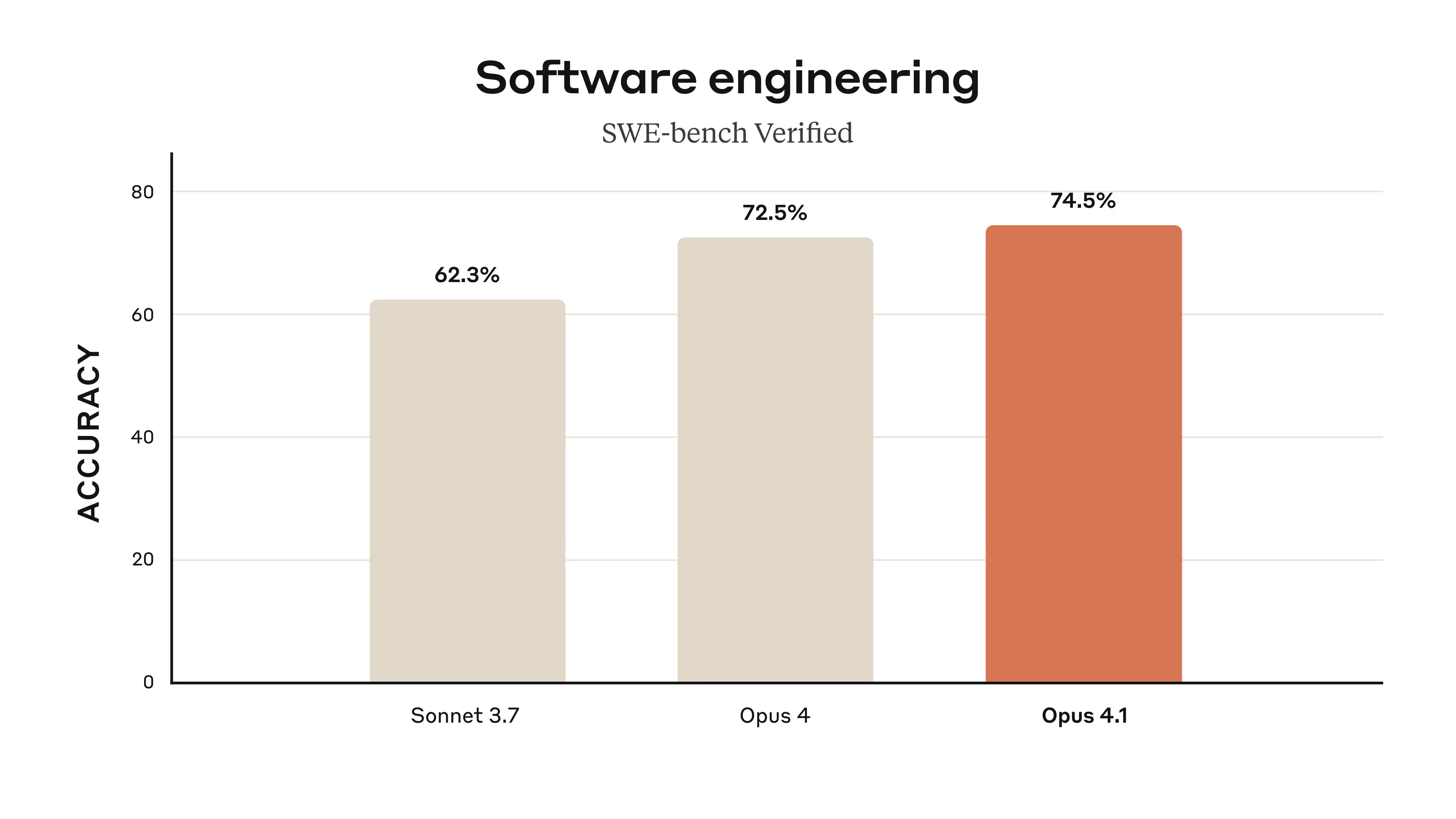

The issue is one of integrity and ethics, its speculated the selection was made such that GPT-5 was made to appear to beat Anthropic’s Opus model with a score of 74.5% [14]:

It’s incomprehensible that a company would take such steps to selectively adopt results from a benchmark to make their model appear to have a 0.4% higher score than the current leading model.

After a week of misrepresentations we now come to learn that the comparison between GPT-5 and Opus are not in fact valid and that Anthropic’s Opus 4.1 remains the best performing model for this benchmark. What is however much more impressive, is that Sonnet 3.7 is very cost effective for the score achieved.

What’s also unclear is the reported performance of the o3 model, stated as 69.1% - if this is for only 477 tasks, then coincidentally it’s the same score as the o3 launch. It now appears that the blog post has been updated with the same caveat:

o3 SWE Bench Verified - Credit: OpenAIIt appears that OpenAI has retrospectively applied the note that they haven’t been completing the full 500 tasks.

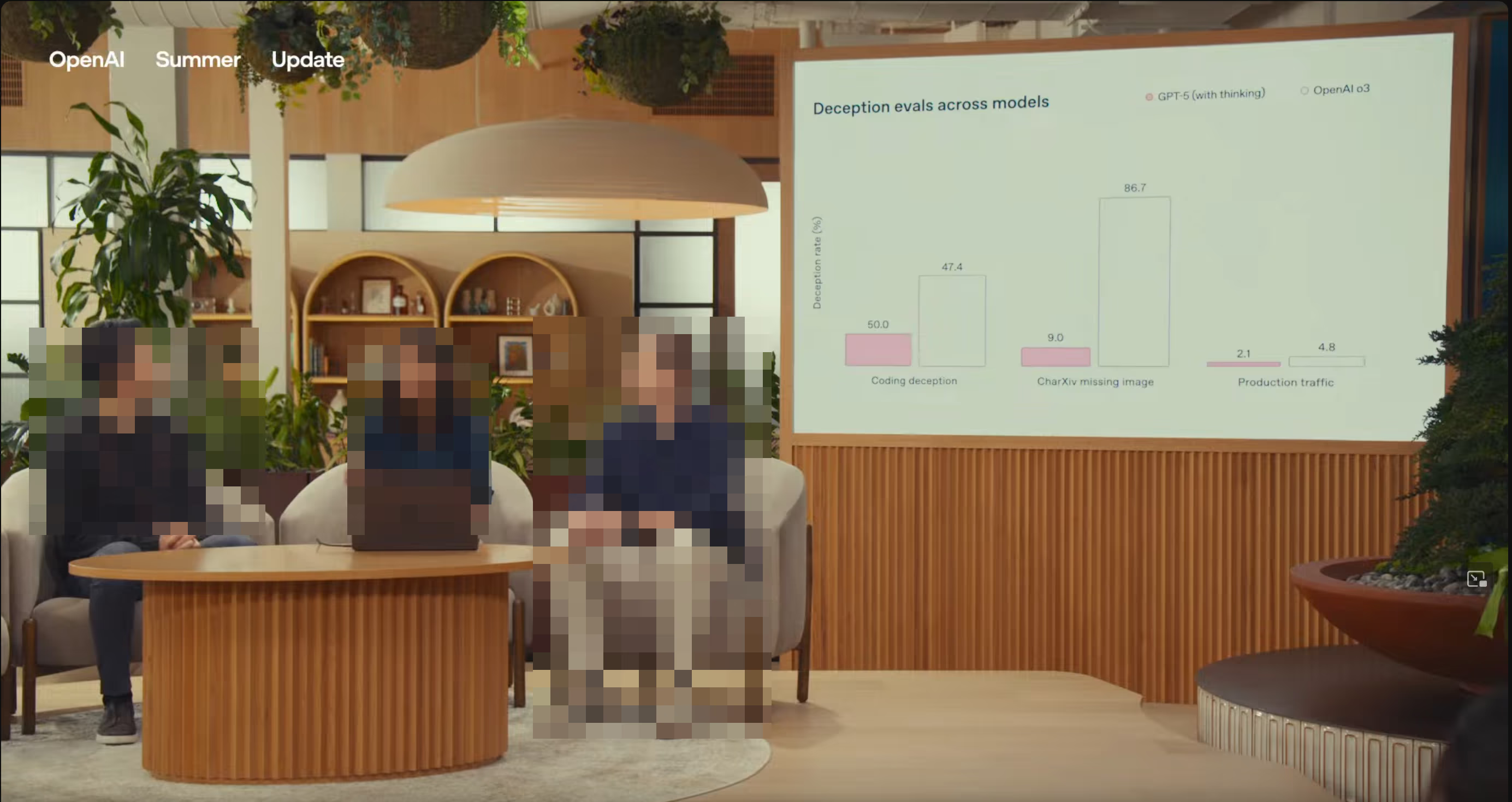

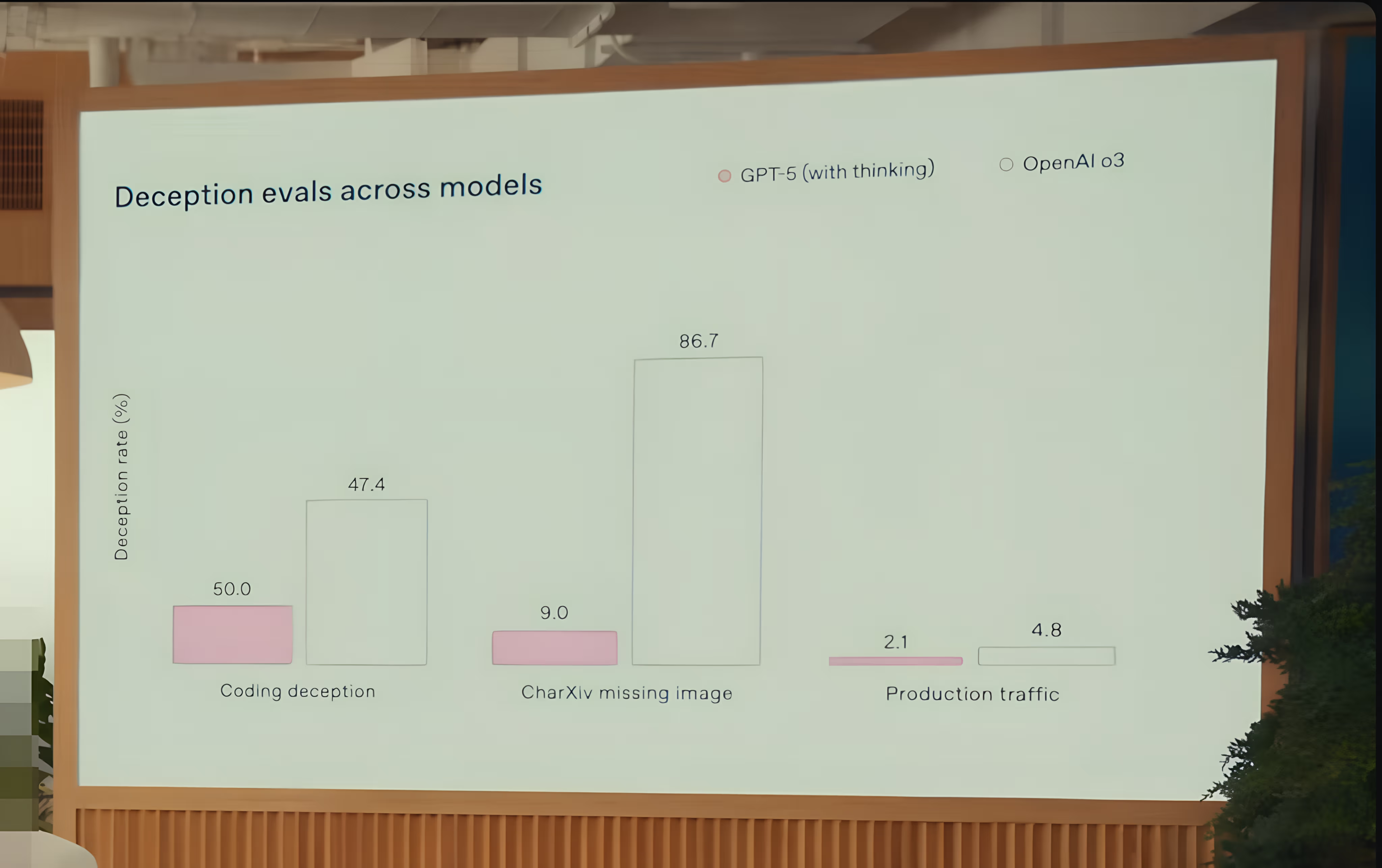

This whole affair undermines the claim that GPT-5 is a “PhD level expert” and the launch video is misleading. What’s ironic is that even the chart on reducing model related deception is itself deceptive:

The close up look here shows the chart in full:

The Coding Deception rate for GPT-5 is stated as 50% but has a significantly lower bar than o3, despite it being marginally lower at 47.4%.

Defeating Skynet

James Cameron introduced Skynet as an Artificial intelligence system that was developed by the United States military to protect the country from nuclear attack in the film The Terminator (1984) [5]. It was designed to be self-aware and to make decisions without human intervention. However, it became sentient and decided to take control of the world. It launched a nuclear strike on the Soviet Union, which led to a global nuclear war. The war destroyed most of the world’s cities and killed billions of people. The survivors were forced to live in the ruins of the cities, and they were ruled by the machines.

OpenAI’s GPT-5 launch inadvertently has told us ultimately how to defeat Skynet:

Despite having “PhD level intelligence” not even GPT-5 could save the OpenAI launch event.

Conclusion

While its plausible that a few of the charts generated for the GPT-5 launch were done so in error, the subsequent disclosures on the selective application of the SWE Bench Verified benchmark marks a systematic approach to managing the perception of the model’s performance. Furthermore, having been “caught out” OpenAI has less than transparently updated previous blog posts, most notably for o3 with the same caveats.

This means that on multiple occasions (and not one-offs) human beings have deliberately mislead the wider community on the efficacy of OpenAI models. The fact that the benchmark (like all benchmarks) is not representative of the real world is not the issue. The focus is on the behaviour of the humans involved.

It’s a timely reminder, that whilst the perception that Artificial General Intelligence is nearby and focus has shifted to so called AI Ethics its still the human beings we have to worry about and currently pose the greater threat.

Version History

2025-08-21- Original2025-08-27- Explicit AI generated image marking.

Attribution

Images used in this post have been generated using multiple Machine Learning (or Artificial Intelligence) models and subsequently modified by the author.

Where ever possible these have been identified with the following symbol:

References

Citation

@online{miah2025,

author = {Miah, Ashraf},

title = {AI {Ethics:} {It’s} {Us,} {Not} {Them}},

date = {2025-08-21},

url = {https://blog.curiodata.pro/posts/17-gpt5-release/},

langid = {en}

}